Consider the Family of Functions Given by H(T) = At2 T âë†â€™ B Where a > 0 and B > 0.

Understanding GRU Networks

![]()

In this commodity, I will try to requite a adequately simple and understandable explanation of one actually fas c inating type of neural network. Introduced by Cho, et al. in 2014, GRU (Gated Recurrent Unit) aims to solve the vanishing slope problem which comes with a standard recurrent neural network. GRU tin too exist considered as a variation on the LSTM because both are designed similarly and, in some cases, produce equally excellent results. If you are not familiar with Recurrent Neural Networks, I recommend reading my brief introduction. For a ameliorate agreement of LSTM, many people recommend Christopher Olah'southward article. I would also add this paper which gives a articulate distinction betwixt GRU and LSTM.

How practice GRUs piece of work?

Equally mentioned in a higher place, GRUs are improved version of standard recurrent neural network. But what makes them and then special and effective?

To solve the vanishing slope trouble of a standard RNN, GRU uses, and so-called, update gate and reset gate. Basically, these are two vectors which decide what information should be passed to the output. The special thing most them is that they can be trained to keep information from long ago, without washing it through fourth dimension or remove data which is irrelevant to the prediction.

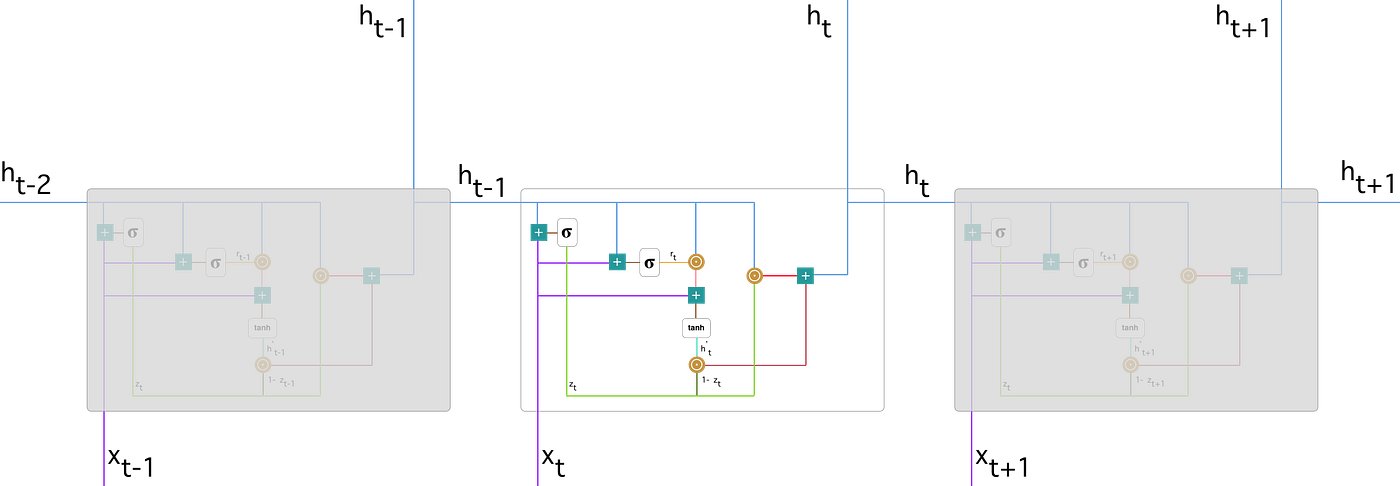

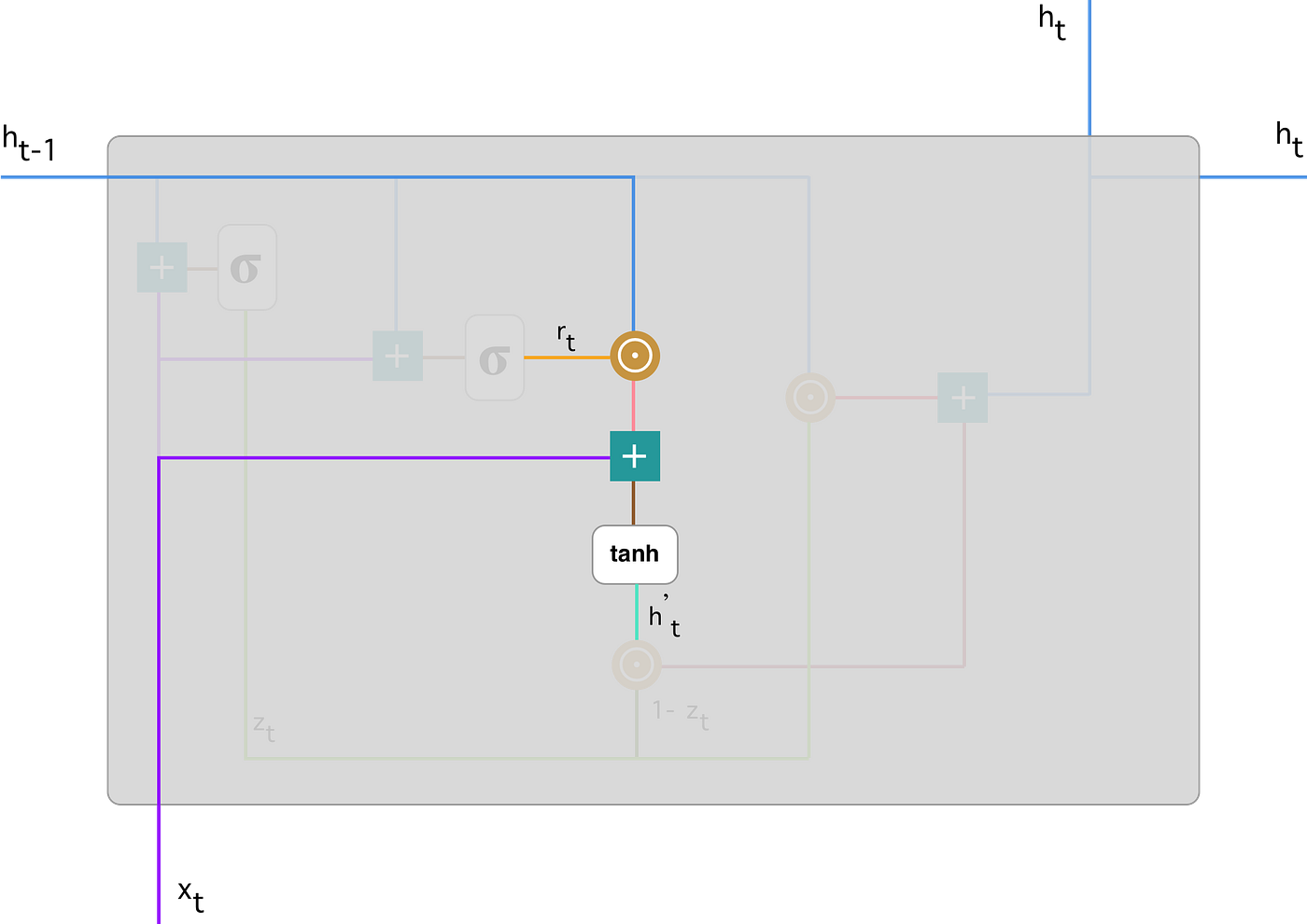

To explicate the mathematics behind that process we will examine a single unit from the following recurrent neural network:

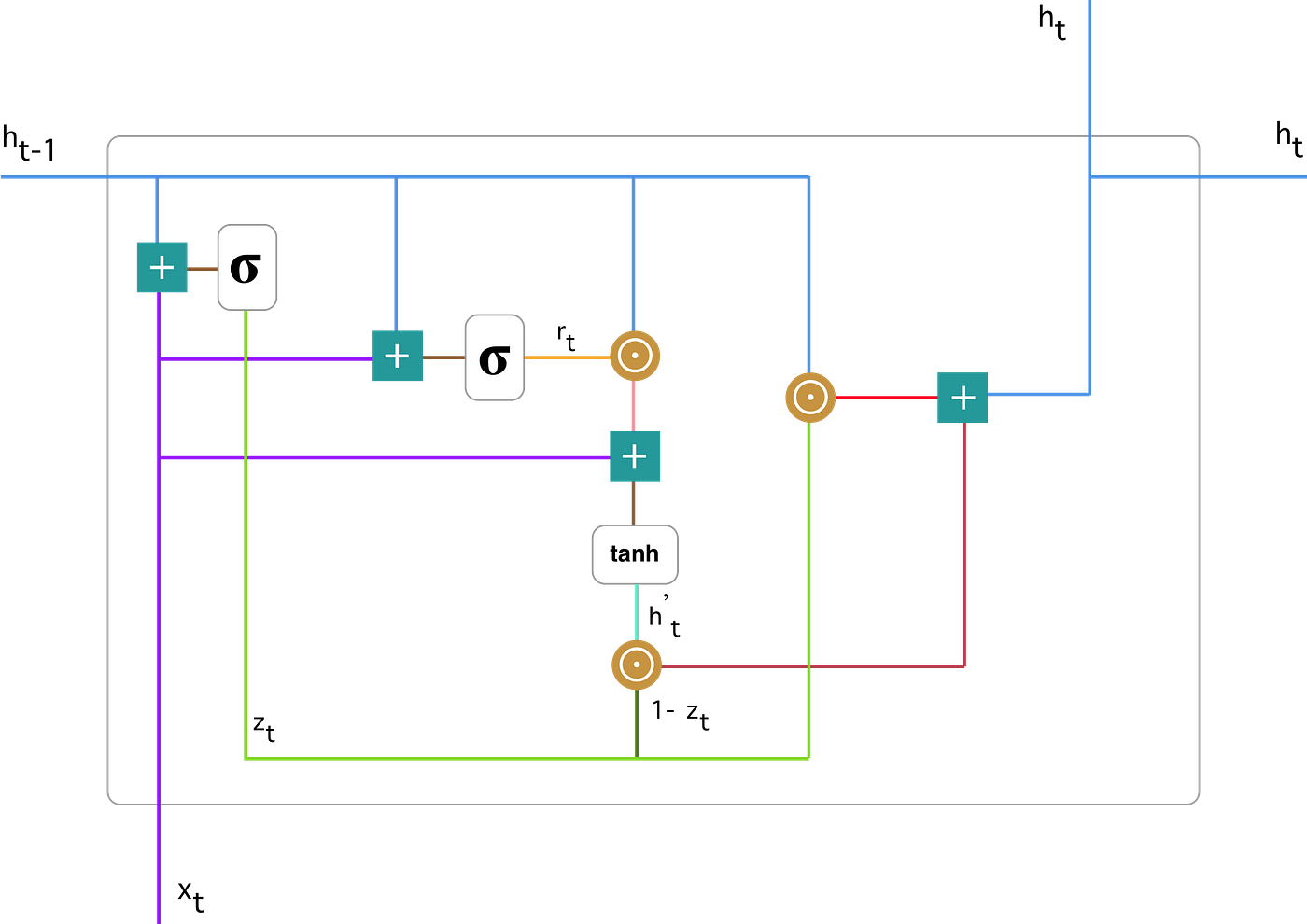

Here is a more detailed version of that unmarried GRU:

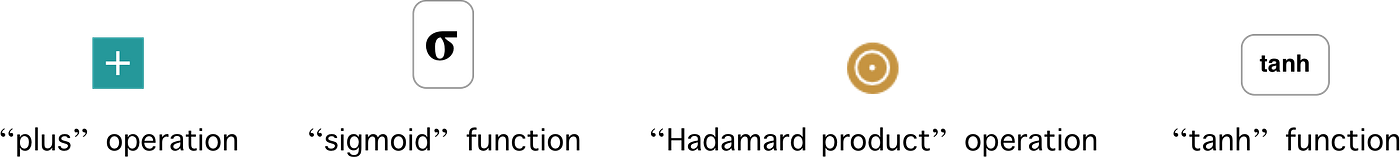

First, allow's introduce the notations:

If you are not familiar with the above terminology, I recommend watching these tutorials about "sigmoid" and "tanh" function and "Hadamard production" performance.

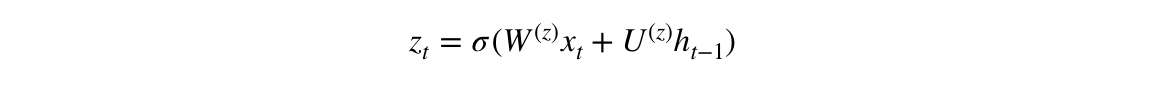

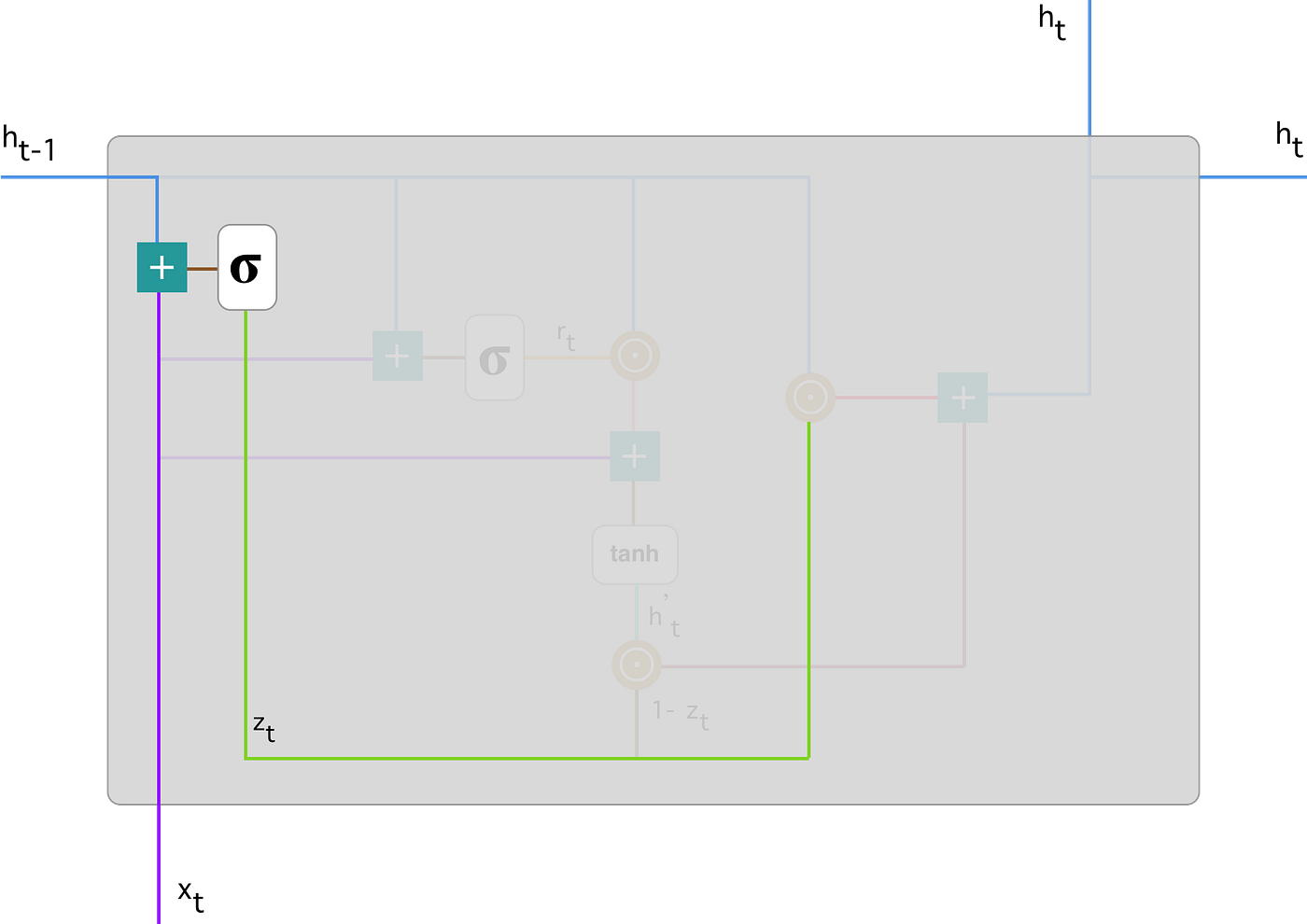

#1. Update gate

Nosotros outset with calculating the update gate z_t for time step t using the formula:

When x_t is plugged into the network unit of measurement, information technology is multiplied by its own weight W(z). The same goes for h_(t-i) which holds the information for the previous t-1 units and is multiplied by its ain weight U(z). Both results are added together and a sigmoid activation role is applied to squash the result between 0 and ane. Following the above schema, we have:

The update gate helps the model to make up one's mind how much of the past information (from previous time steps) needs to be passed forth to the time to come. That is actually powerful considering the model can determine to re-create all the information from the past and eliminate the take chances of vanishing gradient trouble. We volition come across the usage of the update gate later on. For at present remember the formula for z_t.

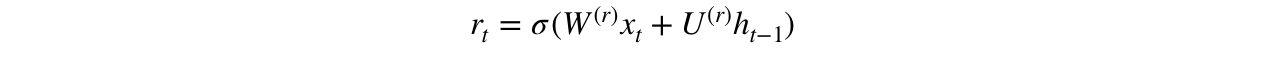

#two. Reset gate

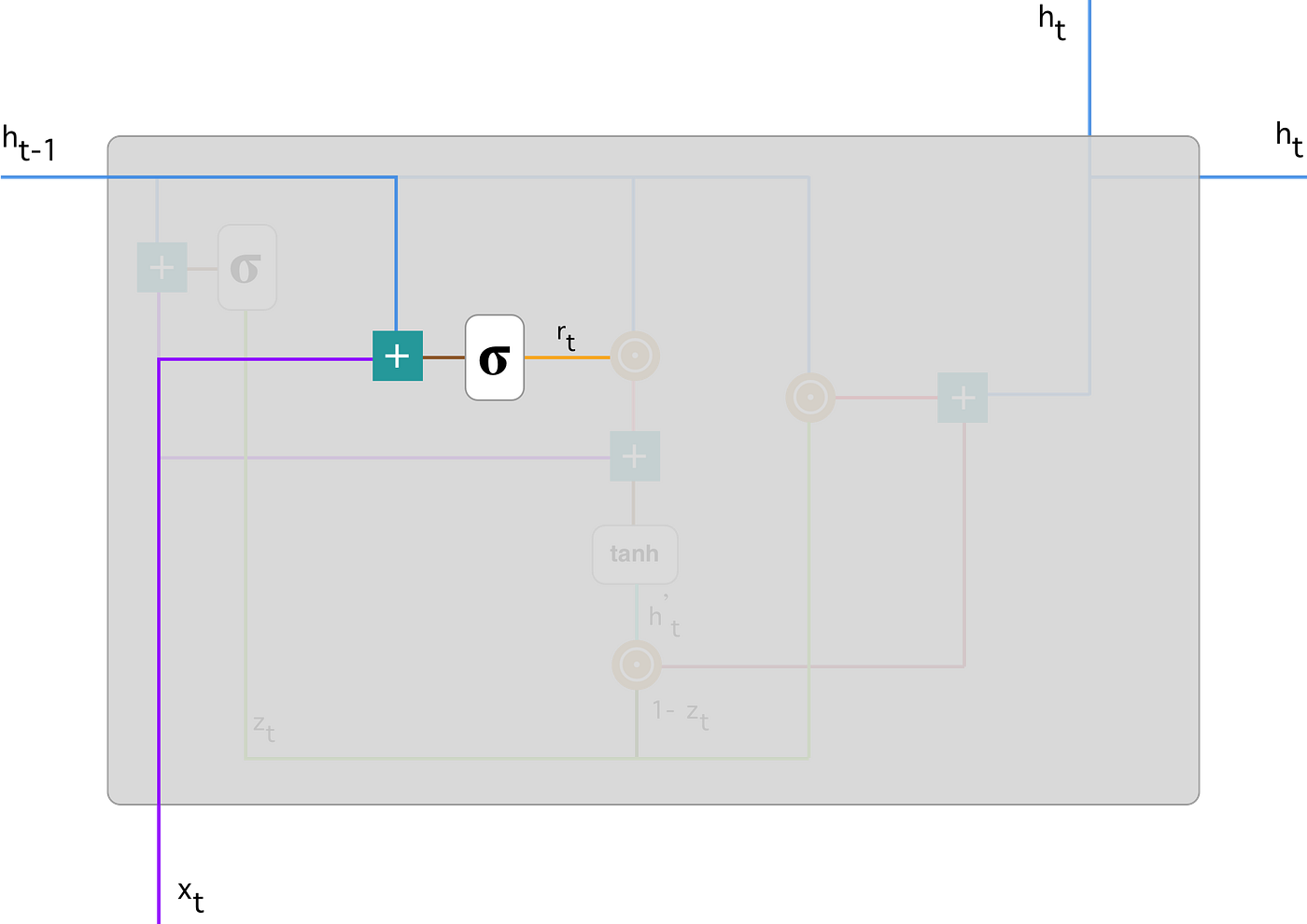

Essentially, this gate is used from the model to determine how much of the past information to forget. To calculate it, we utilise:

This formula is the same as the one for the update gate. The difference comes in the weights and the gate's usage, which will see in a fleck. The schema beneath shows where the reset gate is:

As before, we plug in h_(t-i) — blue line and x_t — imperial line, multiply them with their corresponding weights, sum the results and apply the sigmoid function.

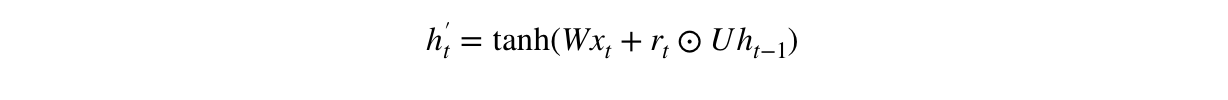

#3. Current memory content

Permit's see how exactly the gates will affect the concluding output. First, we offset with the usage of the reset gate. We introduce a new retentivity content which will use the reset gate to store the relevant information from the past. Information technology is calculated as follows:

- Multiply the input x_t with a weight Westward and h_(t-1) with a weight U.

- Summate the Hadamard (element-wise) product betwixt the reset gate r_t and Uh_(t-1). That will determine what to remove from the previous time steps. Permit's say nosotros take a sentiment assay problem for determining 1'south stance about a book from a review he wrote. The text starts with "This is a fantasy book which illustrates…" and later a couple paragraphs ends with "I didn't quite savor the book because I think information technology captures too many details." To make up one's mind the overall level of satisfaction from the volume we only demand the last part of the review. In that case equally the neural network approaches to the end of the text it volition learn to assign r_t vector shut to 0, washing out the past and focusing only on the final sentences.

- Sum upwardly the results of step 1 and 2.

- Apply the nonlinear activation role tanh.

You can clearly meet the steps here:

We do an element-wise multiplication of h_(t-1) — bluish line and r_t — orangish line and and so sum the result — pink line with the input x_t — imperial line. Finally, tanh is used to produce h'_t — bright dark-green line.

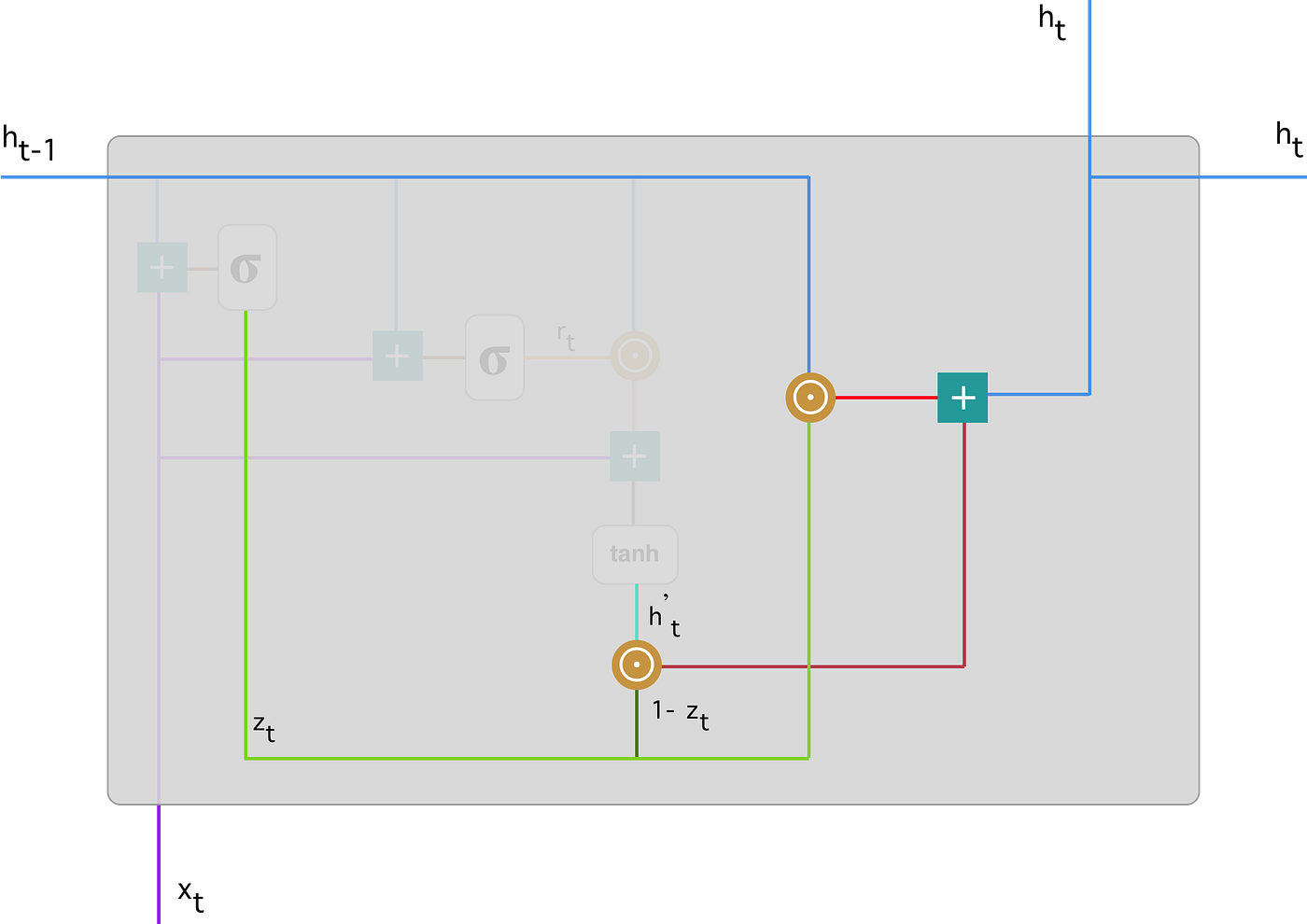

#4. Final memory at electric current fourth dimension step

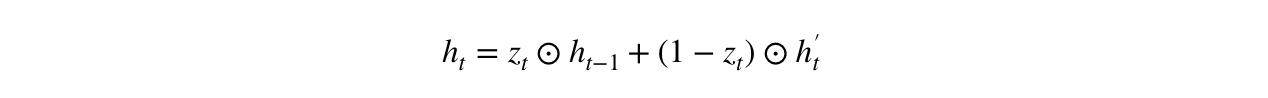

Every bit the last step, the network needs to calculate h_t — vector which holds data for the current unit and passes information technology down to the network. In order to do that the update gate is needed. It determines what to collect from the electric current memory content — h'_t and what from the previous steps — h_(t-1). That is done every bit follows:

- Utilise element-wise multiplication to the update gate z_t and h_(t-1).

- Employ element-wise multiplication to (1-z_t) and h'_t.

- Sum the results from step ane and 2.

Let'south bring up the example about the book review. This time, the most relevant information is positioned in the beginning of the text. The model can learn to ready the vector z_t close to i and go along a majority of the previous information. Since z_t will be shut to 1 at this time footstep, 1-z_t will be shut to 0 which volition ignore big portion of the current content (in this example the last office of the review which explains the book plot) which is irrelevant for our prediction.

Here is an analogy which emphasises on the above equation:

Post-obit through, you can encounter how z_t — greenish line is used to calculate 1-z_t which, combined with h'_t — vivid green line, produces a result in the dark ruby-red line. z_t is likewise used with h_(t-1) — blue line in an element-wise multiplication. Finally, h_t — blue line is a result of the summation of the outputs corresponding to the bright and dark red lines.

Now, you lot can see how GRUs are able to store and filter the information using their update and reset gates. That eliminates the vanishing gradient problem since the model is not washing out the new input every single fourth dimension but keeps the relevant information and passes it down to the next fourth dimension steps of the network. If carefully trained, they can perform extremely well even in complex scenarios.

I promise the article is leaving you armed with a better understanding of this state-of-the-art deep learning model called GRU.

For more AI content, Follow me on LinkedIn.

Cheers for reading. If you lot enjoyed the article, give it some claps 👏 . Hope you have a not bad twenty-four hours!

claassentrier1945.blogspot.com

Source: https://towardsdatascience.com/understanding-gru-networks-2ef37df6c9be

0 Response to "Consider the Family of Functions Given by H(T) = At2 T âë†â€™ B Where a > 0 and B > 0."

Enregistrer un commentaire